06.12.18 By Amey Manjrekar

We are now generating massive volumes of data at an accelerated rate. To meet business needs, address changing market dynamics as well as improve decision-making, sophisticated analysis of this data from disparate sources is required. The challenge is how to capture, store and model these massive pools of data effectively in relational databases.

Let’s consider a shopping website. There is a need to maintain product information, transaction data as well as product reviews. That data can easily be stored in a relational database management system (RDBMS). But as the number of comments increases, we must alter the table to accommodate the increase. These changes are near-real-time and data modeling becomes very challenging due to the time and resources required to complete these changes. Any changes in the RDBMS schema may also affect the performance of the production database. There can be many scenarios similar to this where changes in the RDBMS schema are required due to the nature and volume of information stored in the database. These challenges can be addressed using toolsets from the Hadoop ecosystem.

Hadoop is a java-based framework designed to tackle the complexities of big data analytics, helping users process and store large volumes of data for using real-time analysis. Hadoop functions by using a set of algorithms to interpret results from big data.

Analyzing data in the production database occurs by querying it, which may result in a degradation of overall database performance. In situations where a lot of data analysis needs to be carried out in production databases, users prefer that the data stored in RDBMS be migrated to the Hadoop ecosystem for better performance. Note that Hadoop cannot replace RDBMS completely as Hadoop is only suitable for online analytical processing (OLAP) and not for online transaction processing (OLTP).

For data migration, one of the best tools available in the Hadoop Ecosystem is Apache Sqoop. Sqoop acts as the intermediate layer between the RDBMS and Hadoop to transfer data. It is used to import data from the relational database such as MySQL / Oracle to Hadoop Distributed File System (HDFS) and export data from the Hadoop file system to relational databases.

In cases where computing resources are limited, Sqoop is not a viable option as it may have high resource consumption. A better option in such cases is to use Apache Spark SQL.

Apache Spark SQL is a module of Apache Spark for working on structured data. It helps to process the data in a quick and distributed manner and is designed to efficiently execute interactive queries and stream processing. It offers benefits of speed, ease of use and a unified processing engine.

Let’s consider a possible scenario where the project stack does not include Hadoop Framework, but the user wants to migrate the data from a RDBMS to HDFS equivalent system, for example, Amazon s3. In this scenario, Apache Spark SQL can be used.

Apache Spark SQL has two types of RDBMS components for such a migration, known as JDBCRDD and JDBCDATAFRAME.

If you need to connect Spark with any RDBMS, then JDBC type 4 driver jar file in /lib directory needs to be added. The following code can be used to check for JDBC connectivity:

The above code will access MySQL database and read all the data from employee table.

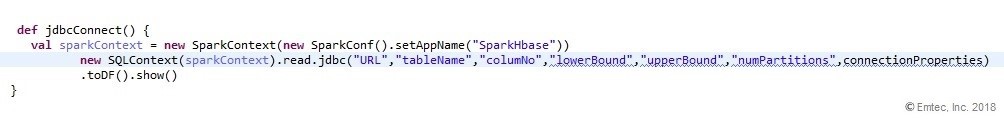

The below-mentioned code can be used to achieve Parallelism by fetching the data:

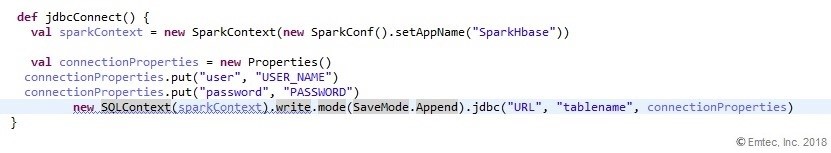

If you want to write data in the database, the following code can be used:

Using the above code snippets, you can import and export data from RDBMS to a HDFS equivalent system.

Sqoop is known to consume a lot of processing power for data migration. Where this is not available, Spark SQL can be considered. There are many considerations when evaluating the tools and methods to migrate, manage and analyze massive amounts of data. If you are considering migration from RDBMS to HDFS, contact our Big Data Consulting experts to get started.